Today we share a fun little Huawei bug that adds a twist to our previous forays into neural network-based exploitation of Android devices. In previous posts, we have shown that the neural network features of modern Android devices can lead to serious - if quite traditional - vulnerabilities. This time, we present a vulnerability in which machine learning is not the culprit, but the tool we use to actually exploit a seemingly minor permission misconfiguration issue!

Introduction

This time last year, while auditing vendor-specific filesystem node access rights, we spotted an SELinux permission misconfiguration issue that at first looked somewhat innocuous: all untrusted applications could access a sysfs-based log file of condensed haptic event statistics. However, after reverse engineering the format of these logs and applying some simple ML tricks, we managed to surreptitiously extract precise touchscreen event patterns from untrusted application contexts. Our proof of concept shows that, through this vulnerability, a malicious application could steal sensitive user data by continuously listening for touchscreen events in the background and inferring the user's input from the observed events. Specifically, our investigation shows that enough information can be extracted to reconstruct user input on PIN pads, and even on virtual on-screen keyboards, with a high degree of accuracy.

Sidenote: as the research was done a year ago, Huawei has obviously not only fixed the issue at hand (CVE-2021-22337), but also upgraded a lot of their devices from Android to HarmonyOS by now. So don’t expect to find the exact same implementation details on your up-to-date Huawei device.

Can Touch This: touchscreen event logging on Huawei’s Android devices

The touchscreen of Huawei smartphones is handled via the tpkit module, which can be found at drivers/devkit/tpkit/ in the published kernel sources.

There are multiple low-level drivers for different touchscreen vendors, but the Nova 5T (YAL), Mate 30 Pro (LIO) and P40 Pro (ELS) all used the same high-level driver, defined in the hostprocessing/huawei_thp* files.

This huawei_thp driver creates some virtual control files under the /sys/devices/platform/huawei_thp/ path. Here is an excerpt of the file list with detailed permissions and SELinux labels:

HWLIO:/ $ ls -alZ /sys/devices/platform/huawei_thp
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 aod_touch_switch_ctrl
-rw-rw---- root   root   u:object_r:sysfs_touchscreen:s0 charger_state
--w------- system system u:object_r:sysfs_touchscreen:s0 easy_wakeup_control
-rw------- system system u:object_r:sysfs_touchscreen:s0 easy_wakeup_gesture
-r-------- system system u:object_r:sysfs_touchscreen:s0 easy_wakeup_position
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 fingerprint_switch_ctrl
-r--r--r-- root   root   u:object_r:sysfs_touchscreen:s0 hostprocessing
-r--r--r-- root   root   u:object_r:sysfs_touchscreen:s0 loglevel
-r--r--r-- root   root   u:object_r:sysfs_touchscreen:s0 modalias
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 power_switch_ctrl
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 ring_switch_ctrl
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 roi_data
-r--r--r-- root   root   u:object_r:sysfs_touchscreen:s0 roi_data_internal
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 roi_enable
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 stylus_wakeup_ctrl
-r-------- system system u:object_r:sysfs_touchscreen:s0 supported_func_indicater
-r--r--r-- root   root   u:object_r:sysfs_touchscreen:s0 thp_status
-rw-r----- system system u:object_r:sysfs_touchscreen:s0 touch_chip_info
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 touch_glove
-rw-r----- system system u:object_r:sysfs_touchscreen:s0 touch_oem_info
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 touch_sensitivity
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 touch_switch
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 touch_window
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 tp_dfx_info
-rw-rw---- root   root   u:object_r:sysfs_touchscreen:s0 tui_wake_up_enable
-rw-rw---- system system u:object_r:sysfs_touchscreen:s0 udfp_enable
-rw-r--r-- root   root   u:object_r:sysfs_touchscreen:s0 uevent
-rw------- system system u:object_r:sysfs_touchscreen:s0 wakeup_gesture_enable

Now let’s focus on the roi_data_internal node, which is owned by the root user but is readable by others as well.

As we can see, most of the other nodes of the huawei_thp kernel module grant no permissions to “others”, so regardless of SELinux, discretionary access control (the traditional Unix permission system) already prevents access.

But roi_data_internal is an exception. It is worth identifying where that comes from.

The sysfs file permissions are assigned programmatically. Here is the relevant part of the drivers/devkit/tpkit/hostprocessing/huawei_thp_attr.c file (taken from the LIO kernel source):

static DEVICE_ATTR(loglevel, S_IRUGO, thp_loglevel_show, NULL);
static DEVICE_ATTR(charger_state, (S_IRUSR | S_IRGRP | S_IWUSR | S_IWGRP),
        thp_host_charger_state_show, thp_host_charger_state_store);
static DEVICE_ATTR(roi_data, (S_IRUSR | S_IRGRP | S_IWUSR | S_IWGRP),
        thp_roi_data_show, thp_roi_data_store);
/* S_IRUGO: readable by owner, group AND others - the problematic line */
static DEVICE_ATTR(roi_data_internal, S_IRUGO, thp_roi_data_debug_show, NULL);
static DEVICE_ATTR(roi_enable, (S_IRUSR | S_IRGRP | S_IWUSR | S_IWGRP),
        thp_roi_enable_show, thp_roi_enable_store);
static DEVICE_ATTR(touch_sensitivity, (S_IRUSR | S_IRGRP | S_IWUSR | S_IWGRP),
        thp_holster_enable_show, thp_holster_enable_store);

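As we can see above, the roi_data_internal device attribute is defined with S_IRUGO, which expands to S_IRUSR | S_IRGRP | S_IROTH (mode 0444): read access for the owner, the group and everyone else. This is the problematic line of code that opened up the vulnerability.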
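To make the mode arithmetic concrete, the following Python sketch (our own illustration, not tooling from the original research) checks the S_IRUGO value and lists the files in a directory whose discretionary access bits grant read access to "others". It only inspects DAC mode bits; whether a read actually succeeds also depends on SELinux. The function name world_readable_files is hypothetical.

```python
import os
import stat

# The kernel's S_IRUGO macro expands to S_IRUSR | S_IRGRP | S_IROTH (0444).
S_IRUGO = stat.S_IRUSR | stat.S_IRGRP | stat.S_IROTH


def world_readable_files(directory):
    """Return the sorted names of regular files in `directory` whose DAC mode
    grants read access to "others". SELinux policy is NOT evaluated here."""
    names = []
    for name in os.listdir(directory):
        try:
            st = os.stat(os.path.join(directory, name))
        except OSError:
            continue  # entry disappeared or cannot be stat'ed; skip it
        if stat.S_ISREG(st.st_mode) and st.st_mode & stat.S_IROTH:
            names.append(name)
    return sorted(names)


# Hypothetical usage on a device; does nothing where the directory is absent.
THP_DIR = "/sys/devices/platform/huawei_thp"
if os.path.isdir(THP_DIR):
    print(world_readable_files(THP_DIR))
```

On an affected device, roi_data_internal would show up in this list together with the other 0444 nodes such as loglevel and modalias.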

However, the picture is not complete without looking at mandatory access control as well. As Huawei smartphones use SELinux, it is important to check which domains the SELinux policy allows to read files labeled sysfs_touchscreen. The policy database can be found at /vendor/etc/selinux/precompiled_sepolicy. Downloading it and querying every rule that allows a read action on the sysfs_touchscreen target type yields more than a thousand lines of output. (The query was run with sesearch -A -t sysfs_touchscreen -p read precompiled_sepolicy.) Among the results, we can find allow rules for the untrusted_app context!

...
allow untrusted_app sysfs_touchscreen:dir { getattr ioctl lock open read search };
allow untrusted_app sysfs_touchscreen:file { getattr ioctl lock map open read };
allow untrusted_app sysfs_touchscreen:lnk_file { getattr ioctl lock map open read };
...
allow shell sysfs_touchscreen:dir { getattr ioctl lock open read search };
allow shell sysfs_touchscreen:file { getattr ioctl lock map open read };
allow shell sysfs_touchscreen:lnk_file { getattr ioctl lock map open read };
...

This meant that the roi_data_internal node was accessible from any application without requesting any permissions.

The access works on an unmodified, out-of-the-box phone; there is no need for any kind of local privilege escalation.

(As seen from the extract above, access is possible from additional contexts as well such as the shell.)
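Sifting through the thousand-plus lines of sesearch output by hand is tedious. A small Python filter like the one below can reduce it to the domains that may read sysfs_touchscreen files. This is a sketch under stated assumptions: it expects one rule per line in the textual format of the excerpt above, and rules written against type attributes may show up under the attribute name rather than under untrusted_app. The function name domains_with_read is ours.

```python
import re
from collections import defaultdict

# Matches rules such as:
#   allow untrusted_app sysfs_touchscreen:file { getattr open read };
#   allow some_domain sysfs_touchscreen:file read;
RULE_RE = re.compile(
    r"^allow\s+(?P<src>\S+)\s+(?P<tgt>[^:\s]+):(?P<cls>\S+)\s+"
    r"(?:\{\s*(?P<perms>[^}]*)\}|(?P<perm>[^\s;]+))\s*;"
)


def domains_with_read(sesearch_output, tclass="file"):
    """Map each source domain/attribute to its permission set on `tclass`,
    keeping only rules whose permission set includes 'read'."""
    result = defaultdict(set)
    for line in sesearch_output.splitlines():
        m = RULE_RE.match(line.strip())
        if not m or m.group("cls") != tclass:
            continue  # not an allow rule, or a different object class
        perms = set((m.group("perms") or m.group("perm")).split())
        if "read" in perms:
            result[m.group("src")] |= perms
    return dict(result)
```

Feeding it the full output and looking for app domains (untrusted_app and friends) among the keys quickly reveals the exposure.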

While the access control bypass itself is readily apparent, we still had to determine how precisely touchscreen usage information can be extracted from this node in order to assess the security impact of the issue.

Meaning of the roi_data_internal matrix

The content of the node is provided by the thp_roi_data_debug_show function in the huawei_thp_core.c file, where ROI_DATA_LENGTH == 49 (a 7-by-7 matrix, printed row by row).

static ssize_t thp_roi_data_debug_show(struct device *dev,
        struct device_attribute *attr, char *buf)
{
    int count = 0;
    int i;
    int ret;
    struct thp_core_data *cd = thp_get_core_data();
    short *roi_data = cd->roi_data;

    for (i = 0; i < ROI_DATA_LENGTH; ++i) {
        ret = snprintf(buf + count, PAGE_SIZE - count, "%4d ", roi_data[i]);
        if (ret > 0)
            count += ret;
        /* every 7 data is a row */
        if (!((i + 1) % 7)) {
            ret = snprintf(buf + count, PAGE_SIZE - count, "\n");
            if (ret > 0)
                count += ret;
        }
    }

    return count;
}
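Because each of the 49 values is printed with "%4d " and a newline follows every seventh value, the node's text can be turned back into a matrix simply by splitting on whitespace. A minimal Python/NumPy sketch (parse_roi and read_roi are hypothetical helper names; read_roi only works on an affected device):

```python
import numpy as np

ROI_SIDE = 7  # ROI_DATA_LENGTH == 49 values, printed as 7 rows of 7


def parse_roi(text):
    """Parse the text produced by thp_roi_data_debug_show() into a 7x7 array."""
    values = [int(tok) for tok in text.split()]
    if len(values) != ROI_SIDE * ROI_SIDE:
        raise ValueError(f"expected {ROI_SIDE * ROI_SIDE} values, got {len(values)}")
    # int16 mirrors the `short *roi_data` buffer in the driver
    return np.array(values, dtype=np.int16).reshape(ROI_SIDE, ROI_SIDE)


def read_roi(path="/sys/devices/platform/huawei_thp/roi_data_internal"):
    """Read and parse one snapshot of the node."""
    with open(path) as f:
        return parse_roi(f.read())
```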

The thp_get_core_data() function call returns the g_thp_core pointer, where the underlying data gets updated by the touchscreen controllers in an interrupt-driven fashion.

Because this node operates on the very same raw data that the touchscreen kernel driver uses, a very high timing precision can be achieved just by monitoring roi_data_internal in a tight loop and looking for changes.
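The tight polling loop can be sketched in Python as follows. This is an illustration, not the authors' implementation: read_snapshot is any zero-argument callable returning the node's current text (on a device, for instance, a function that re-opens roi_data_internal and reads it), and each change is stamped with time.monotonic() by default.

```python
import time


def watch_for_changes(read_snapshot, max_events, max_polls=None, clock=time.monotonic):
    """Busy-poll `read_snapshot()` and return a list of (timestamp, snapshot)
    tuples, one for every time the content differs from the previous read.

    `max_polls` optionally bounds the number of reads so the loop cannot spin
    forever when nothing changes.
    """
    events = []
    previous = read_snapshot()
    polls = 0
    while len(events) < max_events and (max_polls is None or polls < max_polls):
        current = read_snapshot()
        polls += 1
        if current != previous:
            events.append((clock(), current))  # timestamp the change immediately
            previous = current
    return events
```

A busy loop like this trades CPU time for timing precision; the resulting timestamps are what the timing-based refinements discussed later would build on.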

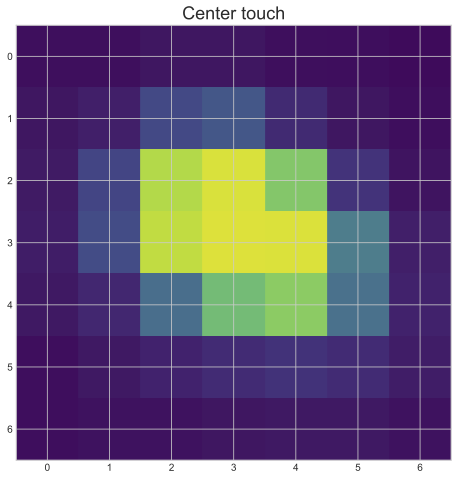

Below is an example output of the node after briefly touching the screen, shown both in its original textual format and graphically.

The highest value is at the center of the matrix ([3,3]), which is by design, as that element should correspond to the estimated point of touch.

HWLIO:/ $ cat /sys/devices/platform/huawei_thp/roi_data_internal
 -27   -2  -40    9   10  -23    0
 -22  -13    8  134  102   13   13
 -13   17  452 2017 1668  191   32
   7   83 1100 3028 2668  322   50
  25   58  209  859  646  106   30
 -13   13    8   72   82   16   16
  -9    8    0    1   29   -5    5

Example roi_data_internal output of touching by fingertip

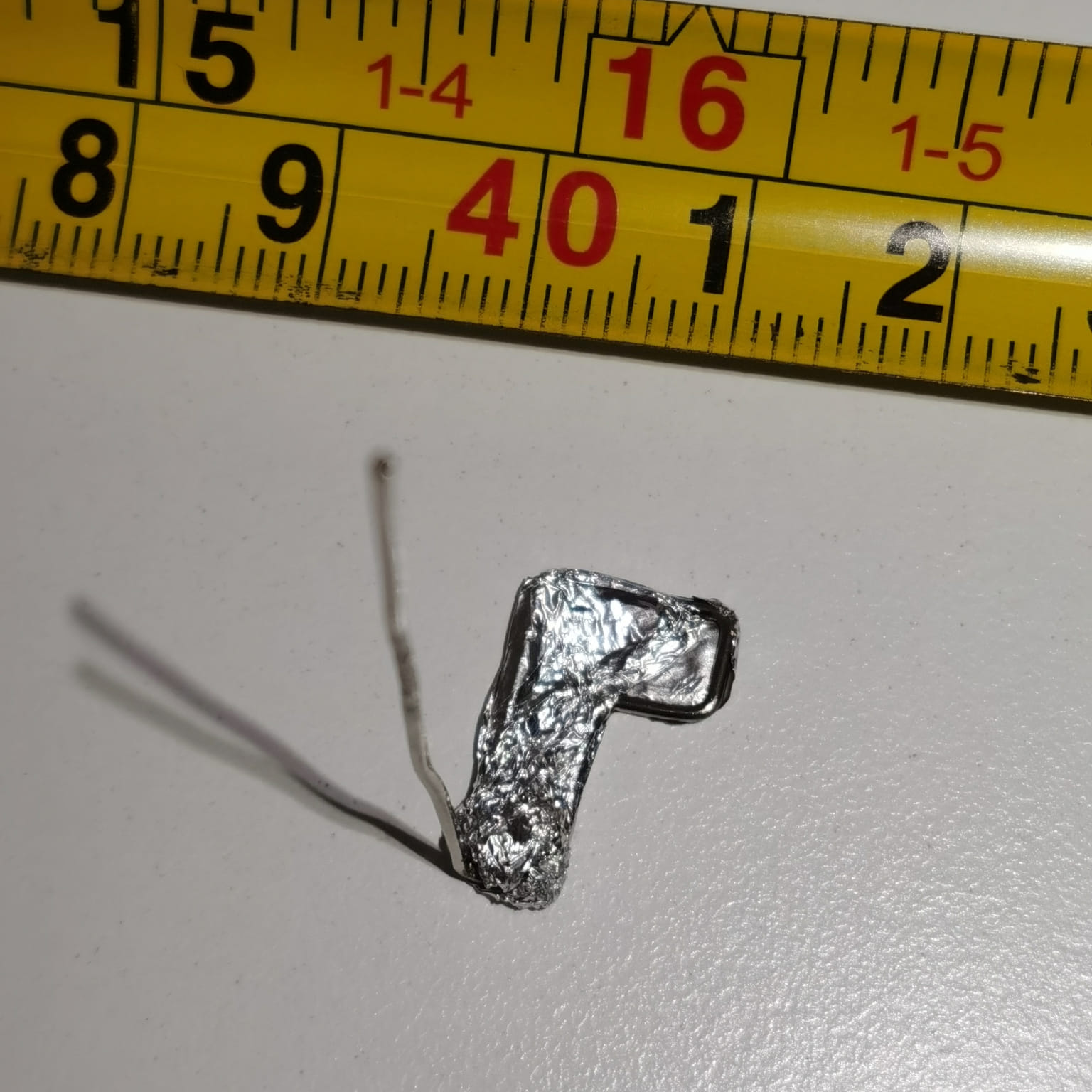
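The claim that the peak sits in the middle of the matrix can be checked directly on the example output. The sketch below (our own illustration) locates the maximum and also computes an intensity-weighted centroid, treating negative readings as zero; that clipping is our assumption, not something the driver does.

```python
import numpy as np

# The fingertip example above, row by row as printed by the node.
EXAMPLE_ROI = np.array([
    [-27,  -2,  -40,    9,   10,  -23,   0],
    [-22, -13,    8,  134,  102,   13,  13],
    [-13,  17,  452, 2017, 1668,  191,  32],
    [  7,  83, 1100, 3028, 2668,  322,  50],
    [ 25,  58,  209,  859,  646,  106,  30],
    [-13,  13,    8,   72,   82,   16,  16],
    [ -9,   8,    0,    1,   29,   -5,   5],
])


def touch_peak(roi):
    """(row, col) of the strongest response in a ROI matrix."""
    r, c = np.unravel_index(np.argmax(roi), roi.shape)
    return int(r), int(c)


def touch_centroid(roi):
    """Intensity-weighted centroid, with negative values clipped to zero."""
    w = np.clip(roi, 0, None).astype(float)
    rows, cols = np.indices(roi.shape)
    return (rows * w).sum() / w.sum(), (cols * w).sum() / w.sum()
```

For the example, touch_peak returns (3, 3), while the centroid lies slightly above and to the right of the center cell, reflecting the asymmetric imprint.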

To validate the conjecture that this 7-by-7 matrix indeed represents the immediate surroundings of the touched screen region (rather than, say, a coarse map of the whole touchscreen centered on the middle of the screen), we made a super sophisticated verification tool: an asymmetrical L-shaped steel paperclip covered in aluminum foil :) The foil is important because modern touchscreens use capacitive sensing: they essentially detect the change in capacitance caused by a conductive object touching their surface.

L-shaped paperclip experiment

As the graph of roi_data_internal clearly resembles the L shape of the paperclip, we can conclude that the matrix closely reflects physical reality, capturing an area of approximately 3 cm by 3 cm around the touched location.

Relation of roi_data_internal data and tapping location

One might assume that if every touch event had exactly the same physical characteristics, it would not be possible to infer the touch location from this data. In reality, that is not entirely the case: when the ROI window extends beyond the physical edge of the screen, the touchscreen driver fills the missing data points with constant zeros. That makes it very convenient to distinguish touches near particular edges of the touchscreen from touches elsewhere, solely based on the ROI data.

But, of course, human touches do not have constant characteristics like those of a theoretical high-precision robotic finger with a homogeneous surface, a fixed angle and force of touch, and a constant time interval between touches regardless of location. Two major factors enable much more precise inference than the zero-filling behavior of the kernel driver alone would allow.

The first is timing. For various reasons, the intervals between touches correlate with the actual key sequence in many scenarios. There is prior art on this in academia. For example, in “Practical Keystroke Timing Attacks in Sandboxed JavaScript”, Lipp et al. show that even in the much more restricted context of a sandboxed JavaScript runtime inside a browser, keystroke timing differences can be used to recover the actual typed phrases. In a scenario even closer to ours, in “Timing attacks on PIN input devices”, Kune et al. use inter-keystroke timings (in their case acquired from audio, which is less precise than the timestamps we can rely on here) to better infer the keys pressed on a PIN pad. Most recently, in “Keylogging Side Channels”, Monaco builds a language model of inter-key timing delays based on many datasets. Interestingly (and perhaps a little counter-intuitively), the longer the word in characters, the higher the chance of recovery, because the delay pattern becomes very distinctive.

The second factor is the variation in the movement and positioning of the fingers. In everyday life, most people hold their phone in their hands and, at the same time, use their thumbs to operate the touchscreen. That means the hands usually stay in a relatively fixed position while only the thumbs move across the screen. The part of the thumb that actually touches the screen leaves an asymmetrical imprint and, more importantly, this imprint changes as we rotate, bend or stretch the thumb to reach a specific region of the screen. This simple observation suggests that differences in the recorded matrix values will correlate with the position touched.

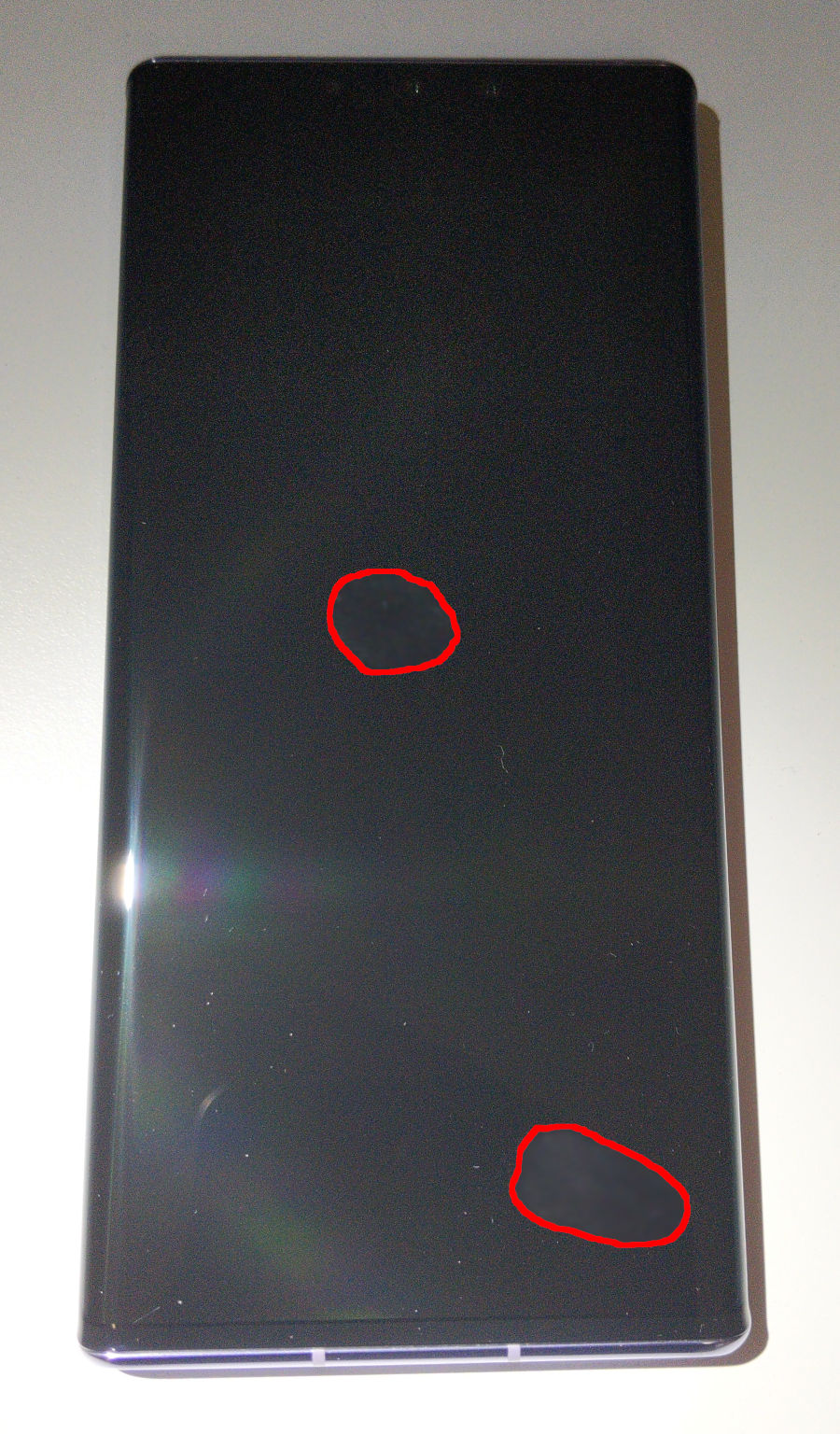

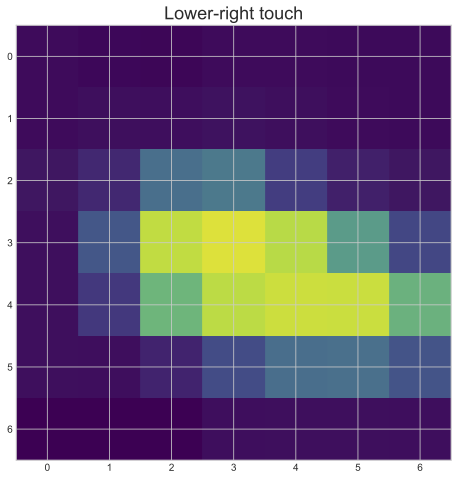

Different imprints on different locations

We demonstrate this effect with a very simple experiment: holding the phone in the right hand, supported by four fingers, we touched the screen with the thumb once in the middle and once near the lower-right corner. As we can see in the visualization, there is a discernible difference! (The fingerprints on the screen are blurred for privacy reasons but highlighted for better visibility. The mathematical transformations used to generate the visualization from the data sets are described in more detail in the next section.)

This experiment suggested that the touch data can be correlated with the location of the touch. In the next section, we go deeper and refine the precision, in order to show that successful inference is not merely anecdotal, but practical.

Touch event targets: PINs and keystrokes

Before building statistical or neural network based models, we had to select targets for our inference experiments. We chose the default virtual keyboard and the PIN pads used for numerical input.

On-screen Keyboard

The virtual on-screen keyboard is essentially the only text input method on Android, so it is inevitably used system-wide. The key layout of an on-screen keyboard is fixed (for a given keyboard localization), which opens it up to the same kind of attack.

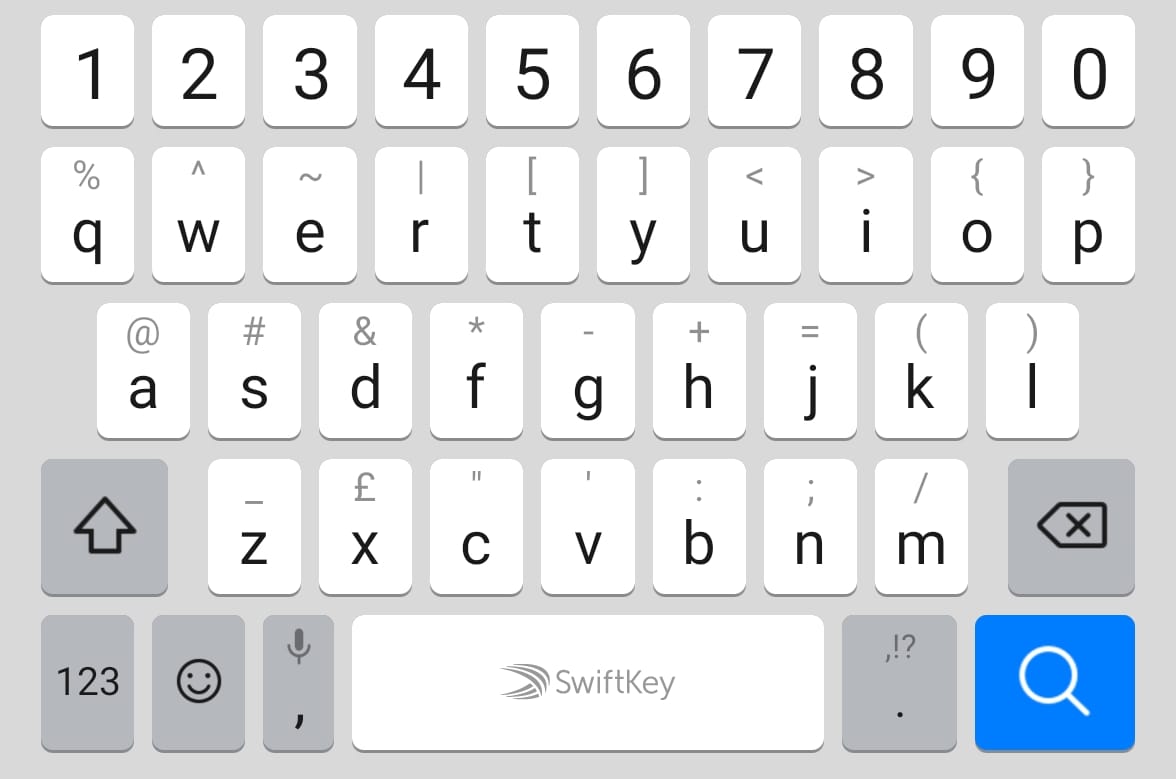

SwiftKey, the default on-screen keyboard on Huawei smartphones

It’s readily apparent that, due to the tighter key spacing and the larger number of possible keys, precise inference on the keyboard from finger imprint data alone is more challenging than on the PIN pad. Our results here are more preliminary, but they still show the feasibility of the approach at a basic level.

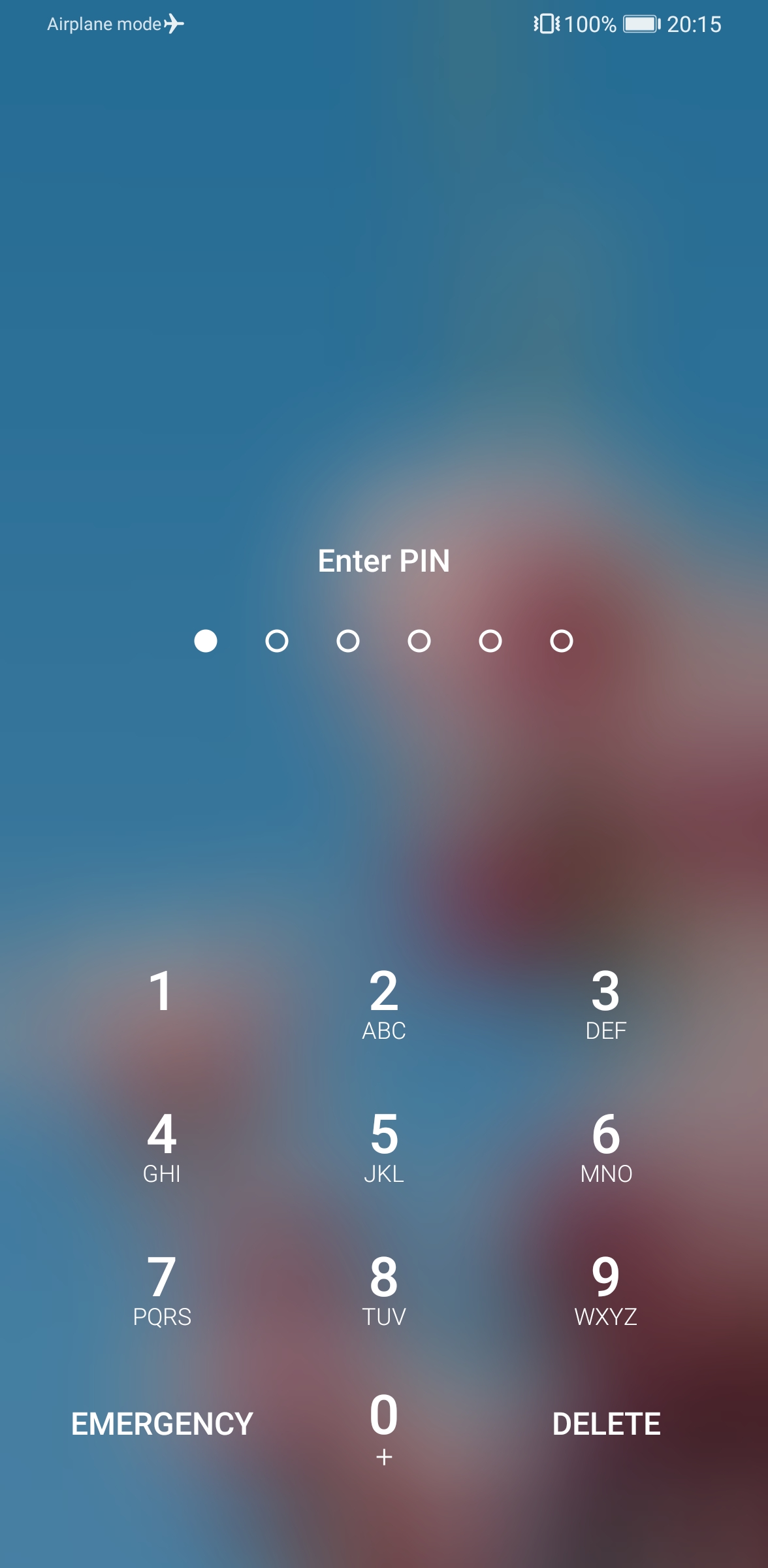

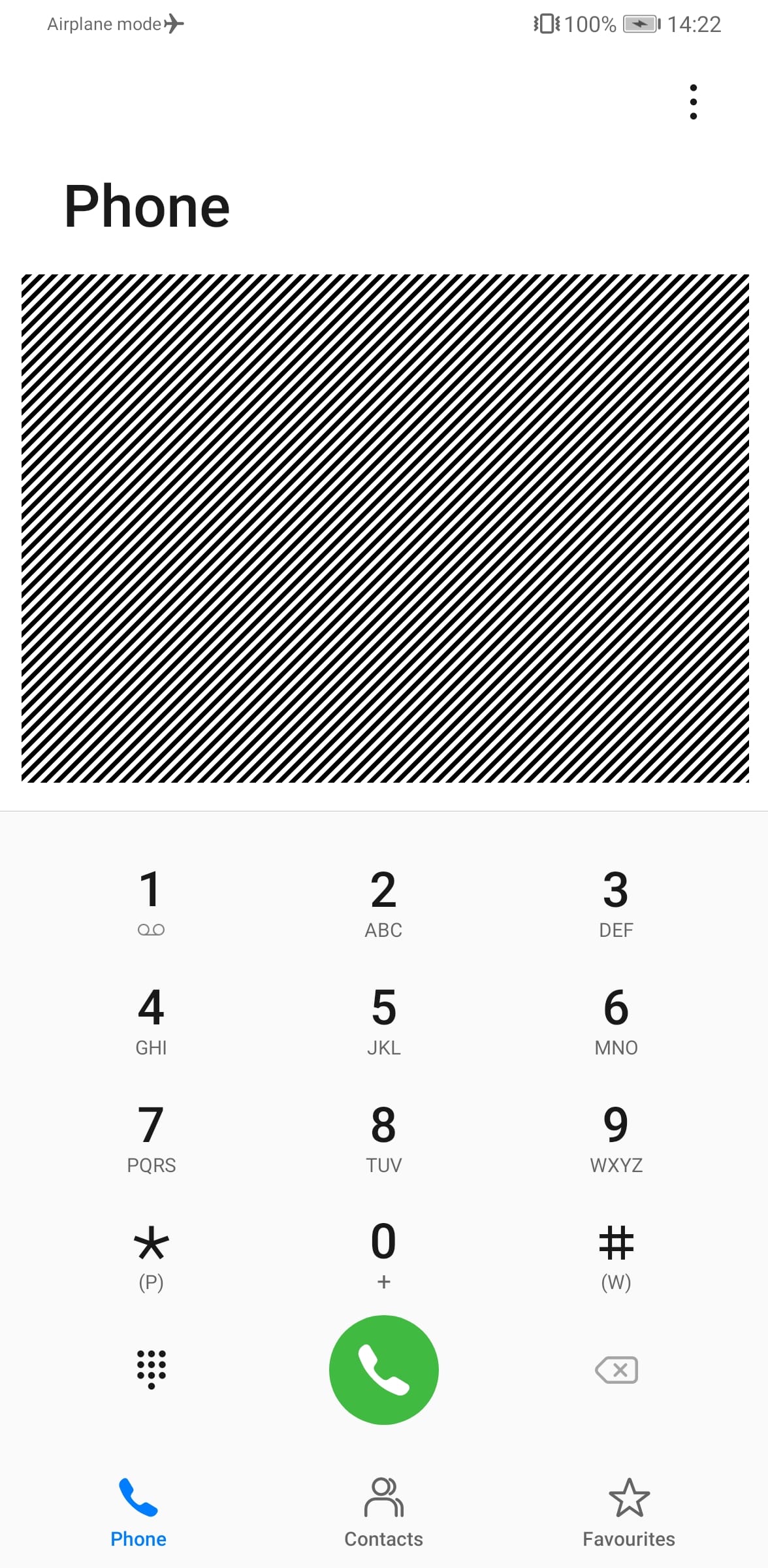

PIN Pad

Huawei smartphones use numerical input in many places, such as the unlock screen or the telephone dialer. As the example screenshots show, the PIN pads use the common 3-by-4 grid. Probably for ergonomic and usability reasons, these grids are well spaced, span almost the whole width of the phone, and take up a significant portion of its height as well.

Example PIN pads of Huawei Smartphones

The digits 1, 4, 7, 3, 6, 9 and 0 are located around 1.5 cm from the edges of the screen on all of the tested devices (YAL, LIO and ELS). In the previous sections, we empirically estimated the side length of the ROI matrix on LIO to be 3 cm, so for a touch on one of these digits, the ROI window reaches about half its side length (1.5 cm) toward the nearest edge, i.e. up to or beyond it. Where the window extends past the screen, the touchscreen driver fills the missing data points with constant zeros, which makes touches around the edges very convenient to detect solely based on the ROI data.
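A straightforward way to exploit this zero-filling is to check whether an entire border row or column of the ROI matrix is exactly zero. The Python sketch below is an illustrative heuristic of ours, assuming that genuine readings essentially never produce an all-zero border; the mapping of matrix rows and columns to physical screen edges is also an assumption that would need to be verified per device.

```python
import numpy as np


def zero_padded_edges(roi):
    """Return the names of ROI borders that are entirely zero.

    Heuristic: an all-zero border row/column most likely means the driver
    padded it because the ROI window extended past that screen edge.
    ASSUMPTION: row 0 = top, last row = bottom, column 0 = left,
    last column = right; this orientation is not verified.
    """
    roi = np.asarray(roi)
    borders = {
        "top": roi[0, :],
        "bottom": roi[-1, :],
        "left": roi[:, 0],
        "right": roi[:, -1],
    }
    return {name for name, values in borders.items() if not np.any(values)}
```

A touch on the 3-6-9 column, for instance, would be expected to yield {"right"} under this orientation assumption.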

The large screens of recent smartphones mean that the thumb travels very different distances to touch, e.g., digit 1 versus digit 9 when the phone is held in the right hand (or similarly 3 versus 7 for the left hand). These distances require significant thumb movement, rotation and stretching, which distort the finger’s imprint.

It is therefore reasonable to hypothesize that these differences make the touched digits distinguishable. To go further, we performed digit recognition based on the ROI data in two phases: first using statistical analysis, then using neural networks.

Statistical Analysis

We had two main objectives: firstly to show that touching a certain digit multiple times results in similar ROI data, and secondly to verify that the ROI data of different digits converge to different means.

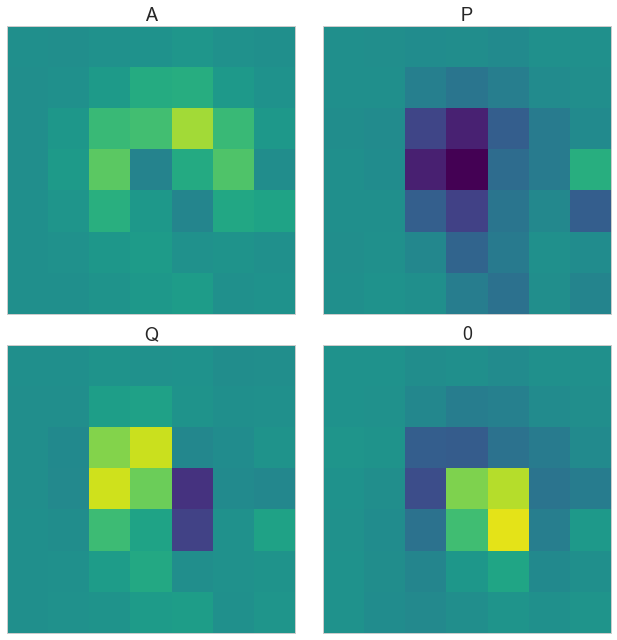

On-screen Keyboard

For the SwiftKey keyboard, we first experimented with statistical analysis on a few keys at the edges: A and Q on the left side, and 0 and P on the right side. The data normalization was the same as for the PIN pad data (see below). The following graph shows the difference between each key’s mean ROI data and the global mean.

Differences between the global mean and the mean ROI data of selected SwiftKey keys

The ROI differences are clearly distinct, which strongly suggests that with further modelling, the keys should be detectable from their corresponding ROI data alone!

PIN Pad

For the PIN pad, we first collected a small dataset (100 touch events per digit) of ROI data labeled with the digit actually touched. The raw samples (the ROI matrices) were then normalized by their energy (the square root of the sum of squares) and averaged per label to obtain a mean baseline for each digit. Averaging these per-digit baselines in turn yields a global baseline.
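The normalization and averaging steps can be written in a few lines of NumPy. This sketch assumes the dataset is stored as an array of shape (10, samples_per_digit, 7, 7), indexed by digit, which matches how the load_data function in the TensorFlow excerpt further down indexes it; the function names are hypothetical.

```python
import numpy as np


def energy_normalize(samples):
    """Divide each 7x7 ROI matrix by its energy (sqrt of the sum of squares)."""
    samples = np.asarray(samples, dtype=float)
    energy = np.sqrt((samples ** 2).sum(axis=(-2, -1), keepdims=True))
    # leave all-zero matrices untouched instead of dividing by zero
    return samples / np.where(energy == 0, 1.0, energy)


def baselines(dataset):
    """Return (per_digit_baselines [digits, 7, 7], global_baseline [7, 7])."""
    normalized = energy_normalize(dataset)
    per_digit = normalized.mean(axis=1)        # average the samples of each digit
    global_baseline = per_digit.mean(axis=0)   # average of the per-digit baselines
    return per_digit, global_baseline
```

The per-digit differences plotted in the figures correspond to per_digit - global_baseline.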

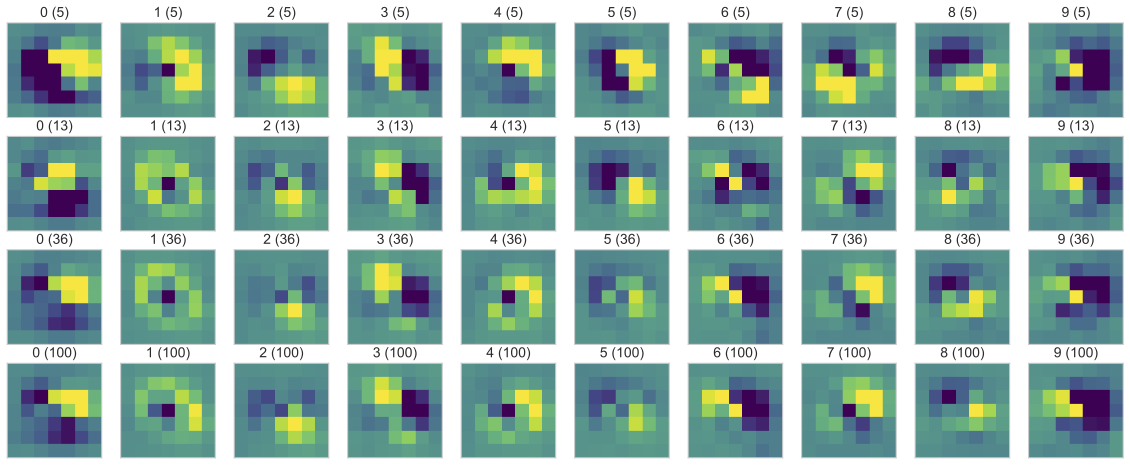

To verify the first objective, we checked the convergence of the per-digit ROI data by randomly selecting increasing numbers of samples from the normalized dataset and plotting the difference between their average and the global mean. In the figure below, each column corresponds to a digit, and going down, more samples are included in the average. It clearly shows that the data converges to a stable per-digit average. (Another way to demonstrate this would be to compare the variance within each digit group to the variance between digit groups.) The current method is rather empirical, but it makes the connections easier to grasp visually. It tells us that touching a digit yields reproducible ROI data.
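Both the subsampling experiment and the variance comparison mentioned in parentheses can be sketched with NumPy. These are illustrations of the described methods, not the authors' code: convergence_curve averages k randomly chosen normalized samples of one digit for each requested k and subtracts the global baseline, while variance_ratio compares the spread between per-digit means to the spread of samples around their own digit's mean.

```python
import numpy as np


def convergence_curve(digit_samples, sample_sizes, global_baseline, rng=None):
    """For each k, average k randomly chosen normalized samples of ONE digit
    (without replacement) and return the (mean - global_baseline) matrices."""
    rng = np.random.default_rng() if rng is None else rng
    digit_samples = np.asarray(digit_samples, dtype=float)
    n = len(digit_samples)
    curves = []
    for k in sample_sizes:
        idx = rng.choice(n, size=k, replace=False)
        curves.append(digit_samples[idx].mean(axis=0) - global_baseline)
    return np.stack(curves)


def variance_ratio(normalized):
    """Between-digit vs. within-digit variance for a (digits, samples, 7, 7)
    array. A ratio well above 1 means digits differ more from each other than
    repeated touches of the same digit differ among themselves."""
    normalized = np.asarray(normalized, dtype=float)
    per_digit = normalized.mean(axis=1)
    within = ((normalized - per_digit[:, None]) ** 2).mean()
    between = ((per_digit - per_digit.mean(axis=0)) ** 2).mean()
    return between / within
```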

Difference of normalized samples and baseline versus sample sizes

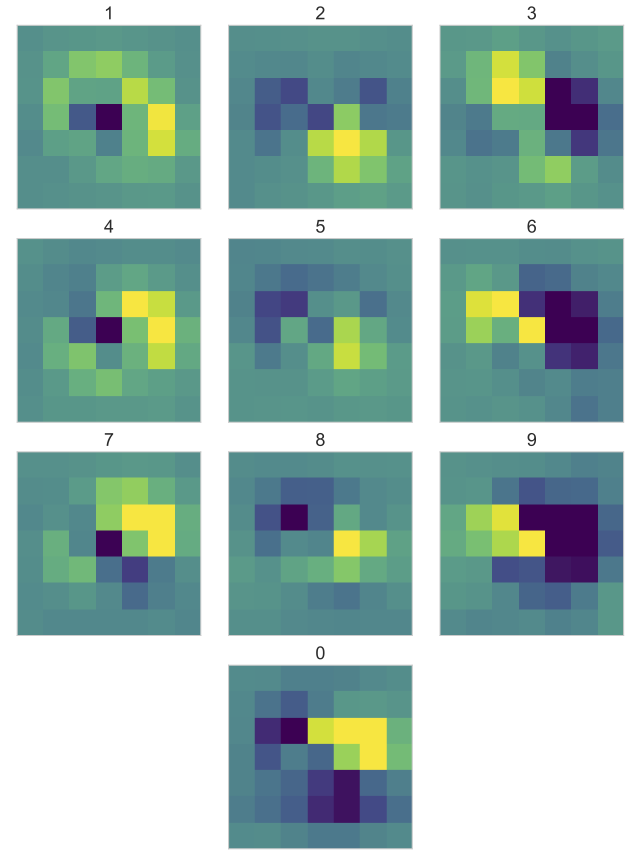

To verify the second objective, we plotted the per-digit means and compared them with each other. The next figure shows the differences between the per-digit baselines and the global baseline. The differences between the digits are clearly distinct. Even by an eyeball test, it’s readily apparent that the four digit groups 1-4-7, 2-5-8, 3-6-9 and 0 have easily distinguishable patterns. Of course, automated processing can tell them apart even better. To make the precision even more apparent, we turned to neural networks.

Differences of mean ROI data and a global baseline grouped by digits
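As a simple illustration of how automated processing could tell the digits apart without a neural network, a nearest-centroid classifier assigns a touch to the digit whose baseline is closest. This is a swapped-in baseline technique for illustration, not a method evaluated in this research; it assumes per-digit baselines computed from energy-normalized samples as described above, and the function name is hypothetical.

```python
import numpy as np


def nearest_baseline_digit(roi, per_digit_baselines):
    """Classify one ROI matrix as the digit whose baseline is closest
    (Euclidean distance after energy normalization)."""
    roi = np.asarray(roi, dtype=float)
    energy = np.sqrt((roi ** 2).sum())
    normalized = roi / energy if energy else roi
    diffs = np.asarray(per_digit_baselines, dtype=float) - normalized
    distances = np.sqrt((diffs ** 2).sum(axis=(1, 2)))
    return int(np.argmin(distances))
```

Such a classifier is a useful sanity check: if it already separates the digit groups well, a neural network has a solid signal to build on.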

Neural Network Models

To provide further evidence, we also experimented with very simple neural network models and obtained great results. We therefore think that, with more data and more advanced data cleaning methods, neural networks are a good fit for implementing the mapping between ROI data and the digits actually touched.

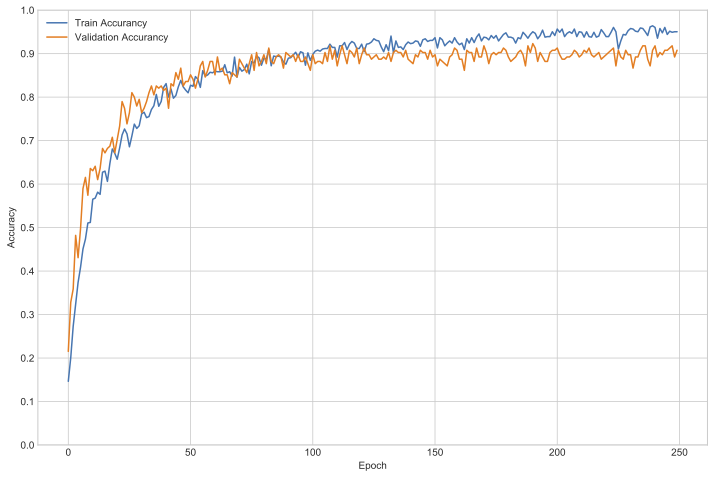

As with the statistical analysis, the entire neural network computation was implemented in TensorFlow.

Our neural network model receives the 49 values of the ROI matrix at the input layer and has 10 output nodes, one per digit. In between, there are only two fully connected layers, each with 512 nodes and the ReLU activation function. Our 1000-sample dataset is tiny by ML standards, and models trained on so little data tend to overfit, so we also added “dropout” layers (along with L2 weight regularization) to counter this effect. As the loss function, we chose cross entropy, as we expect a one-hot encoding on the output layer (that is, for an input ROI only one of the 10 output neurons should fire). During training, roughly one-fifth of the input data is held out for validation. Below is an excerpt from the TensorFlow code:

import numpy as np
import tensorflow as tf

print(tf.__version__)

# `dataset` is assumed to be a NumPy array of shape (10, samples_per_digit, 7, 7)
# holding the recorded ROI matrices for each digit 0-9.
def load_data(dataset, ratio=0.2):
    """Randomly split the labeled ROI samples into training and test sets."""
    train_data, train_label, test_data, test_label = [], [], [], []
    for digit in range(dataset.shape[0]):
        for idx in range(dataset.shape[1]):
            if np.random.rand() < ratio:
                # goes to test
                test_data.append(dataset[digit][idx])
                test_label.append(digit)
            else:
                # goes to train
                train_data.append(dataset[digit][idx])
                train_label.append(digit)
    print(f"training size: {len(train_data)}")
    print(f"testing size: {len(test_data)}")
    return (np.array(train_data), np.array(train_label)), (np.array(test_data), np.array(test_label))

(train_data, train_label), (test_data, test_label) = load_data(dataset, ratio=0.2)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(7, 7)),
    tf.keras.layers.Flatten(),  # 7x7 ROI matrix -> 49 input values
    tf.keras.layers.Dense(512, kernel_regularizer=tf.keras.regularizers.l2(0.0001), activation='relu'),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(512, kernel_regularizer=tf.keras.regularizers.l2(0.0001), activation='relu'),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(10)  # one logit per digit
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])

history = model.fit(train_data, train_label, validation_data=(test_data, test_label), epochs=250)
model.evaluate(test_data, test_label, verbose=2)

Surprisingly, such a simple model already performed quite well: we achieved around 90% accuracy without fine-tuning the network structure or the input data! Also note that the current model relies solely on ROI data and does not account for timing information. A recurrent neural network with memory could also take the inter-touch delays into account, which would likely improve the accuracy further.

Neural network training history

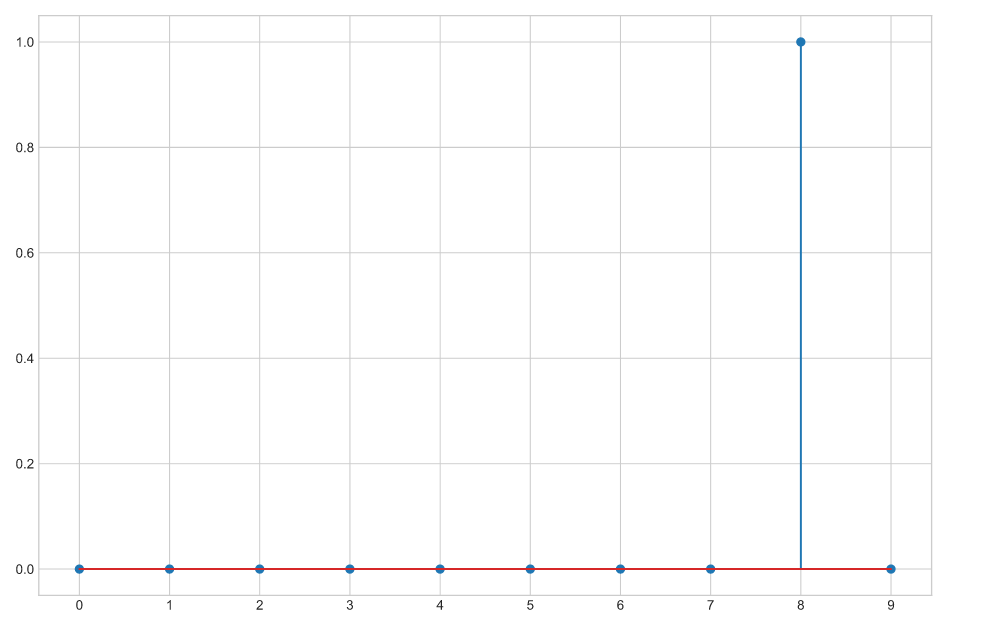

Finally, we built a predictor that classifies PIN pad touches and tried it live on the phone, with the expected positive results:

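import matplotlib.pyplot as plt
import numpy as np

# Continues the previous excerpt: wrap the trained model to output probabilities.
probability_model = tf.keras.Sequential([model, tf.keras.layers.Softmax()])

# acquisition_one_shot() is our helper (not shown); it captures one touch's 7x7 ROI matrix.
roi = acquisition_one_shot()
pred = probability_model.predict(np.array([roi]))[0]

print('; '.join(f"{i}: {pred[i]:.2f}" for i in range(len(pred))))
print(f"maximum: {np.argmax(pred)}")

plt.stem(pred)
plt.xticks(range(10))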

Neural network classification result

To conclude, we empirically showed that ROI data can be mapped onto the PIN pad digits with high accuracy!

Real-life scenarios?

Admittedly, these results are experimental. A real-life inference attack would call for considerably higher precision.

One thing that could be done is on-device training.

The side-channel information obtained from roi_data_internal is dependent on user traits: the ROI data will vary with hand size, handedness, and phone usage habits.

Variations may also stem from geographical or language-specific characteristics.

Although it might seem difficult to develop a general algorithm that works for every victim, it would not be strictly necessary to effectively exploit this vulnerability.

A key reason is that the attacker (the malicious application) can also train its decision algorithms on the device, with high precision for that particular user.

The application can easily be designed to collect touch events from the user whenever the application itself is used.

Effectively, an app can “learn” its user’s gestures by continuously monitoring the ROI data while knowing the ground truth from its own legitimate input fields.
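The labeling step could look roughly like the following Python sketch, in which each ground-truth key event from the app's own input field is paired with the nearest ROI snapshot in time. Everything here is hypothetical: the event formats, the 150 ms max_skew tolerance, and the assumption that a single representative ROI snapshot is kept per touch.

```python
import bisect


def label_touches(touch_events, key_events, max_skew=0.15):
    """Pair ROI snapshots with the keys the user actually typed.

    touch_events: list of (timestamp, roi), sorted by timestamp
                  (e.g. one representative snapshot per touch from a polling loop).
    key_events:   list of (timestamp, key), sorted by timestamp, taken from the
                  app's own input field (hypothetical ground-truth source).
    Returns a list of (roi, key) pairs whose timestamps differ by <= max_skew s.
    """
    touch_times = [t for t, _ in touch_events]
    labeled = []
    for key_time, key in key_events:
        i = bisect.bisect_left(touch_times, key_time)
        # nearest candidates: the touch just before and the one just after
        candidates = [j for j in (i - 1, i) if 0 <= j < len(touch_events)]
        if not candidates:
            continue
        best = min(candidates, key=lambda j: abs(touch_times[j] - key_time))
        if abs(touch_times[best] - key_time) <= max_skew:
            labeled.append((touch_events[best][1], key))
    return labeled
```

The resulting pairs are exactly the kind of labeled samples the classifiers above are trained on, but collected from the victim's own hand.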

Also, such behavior would be difficult to detect through monitoring, because reading roi_data_internal does not generate any kernel log messages.

(As a side note, thanks to the neural network cores in current-generation Huawei Kirin chipsets, even the neural network training phase could be performed on the device :) )

The other parameter that could be used to refine the precision is the timing.

We have not experimented further with timing information for inferring keyboard keystrokes, but besides the imprint of the fingertip, roi_data_internal also provides very precise timing information.

Because of the keyboard geometry, timing carries information as well: the finger has to travel farther to reach distant keys, which translates into longer delays between keystrokes.
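To feed timing into a model alongside the imprint, the inter-touch delays can simply be appended to the flattened ROI matrices. The sketch below is a hypothetical feature construction of ours (we did not evaluate it); it sets the interval of the first touch to 0.0, since it has no predecessor.

```python
import numpy as np


def inter_touch_intervals(onset_times, first=0.0):
    """Delays (seconds) between consecutive touch onsets; the first touch gets `first`."""
    onsets = np.asarray(onset_times, dtype=float)
    if onsets.size == 0:
        return onsets
    return np.concatenate(([first], np.diff(onsets)))


def with_timing(rois, onset_times):
    """Append each touch's interval to its flattened 7x7 ROI -> 50 features per touch."""
    flat = np.asarray(rois, dtype=float).reshape(len(rois), -1)
    return np.column_stack([flat, inter_touch_intervals(onset_times)])
```

A sequence model such as the recurrent network mentioned earlier could consume these rows in order, letting it learn typical travel times between keys.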

The existing prior art leads us to believe that this information could in fact be leveraged very effectively to improve the precision of the recovery of keystrokes, especially in the case of typing natural language text (as opposed to pseudorandom characters such as passwords).

Summary

In conclusion, our proofs of concept demonstrate that precise keystroke inference was a practical attack in this case. To Huawei’s credit, even though our PoC lacked the refinement of a practical real-life implant, the PSIRT took the issue seriously and created a patch and a CVE in less than 60 days. In appreciation of that, we waited a while longer with this publication. We think that the educational value of combining NN processing with a simple SELinux-mishap-based infoleak has not been diminished by the time that has passed since the patch was rolled out.