Additional posts in this series:

If you’ve watched my Basebanheimer talk, you will have noticed that concrete ideas for exploiting CVE-2022-21744, a heap buffer overflow in Mediatek baseband, were omitted from the talk for brevity.

This heap overflow vulnerability has an important limitation: the overwriting value is a pointer to an allocation with attacker controlled bytes.

In other words, as explained in the talk, we aren’t controlling the bytes we corrupt with directly, we write 4 byte pointer values that each point to an allocation with content controlled by the attacker.

This creates new challenges, since the Mediatek heap exploitation techniques that we disclosed in 2022 would not apply directly due to the nature of our overwrite primitive. Still, a primitive like this may still work out if we can find a suitable victim to corrupt on the heap.

A sequential write of pointer values like this can run into issues with, but also present new opportunities in, the heap allocator itself. In either case, there weren’t any public suitable techniques, so we had to look for an original solution for this baseband.

In the following, I describe my findings from researching the exploitability of this vulnerability in that context.

Mediatek CVE-2022-21744 Recap

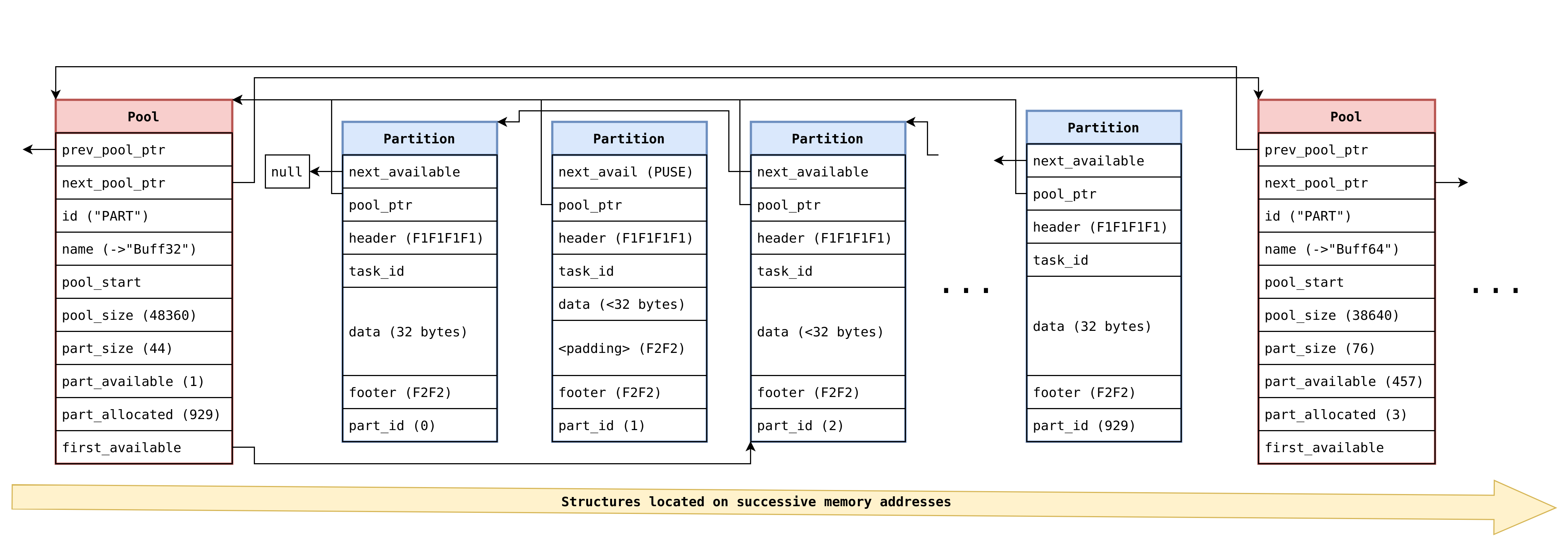

As a quick recap from our previous post, the original Nucleus OS heap implementation had the following basic structure:

- pool-based allocator (slots are called partitions)

- partition sizes are powers of 2, so allocation requests are rounded up to 32/64/128/256/512/etc bytes

- each partition comes with a minimum overhead of 20 bytes: a 16 bytes header and a footer of a partition counter (on 2 bytes) rounded up to integer bitwidth with

0xF2F2 - the entire heap itself is reserved at a fix location in the firmware memory layout (same address every time); each partition pool for each size is always at the same location, one after the other, with the partition pool descriptor structures always directly in front of the actual pool itself

- whenever the requested size itself is less than slot size minus 4, after the requested size a

F2F2F2F2repeating guard footer is appended; in other words, with a precise allocation request, these guard bytes are eliminated.

Our last research has highlighted multiple weaknesses of this implementation and explained how to create partition free list poisoning, partition-to-pool overwrite, and partition-overlap techniques to create an allocate-anywhere heap exploitation primitive.

In response, Mediatek has made several changes to the allocator! With Dimensity, we find a modified heap structure and a number of heap hardening checks added, that were designed to stop the techniques we have described.

Mediatek How2Heap, Whack-a-Mole Edition

Recap

New Heap Structure

In an apparent attempt to mitigate attacks against next_available poisoning, the implementation has been modified to eliminate this freelist entirely.

Instead, a new structure, the mer pool descriptor has been introduced.

struct mer_pool_desc_t

0x0 0x4 struct mer_pool_desc_t * struct mer_pool_desc_t * mer_pool

0x4 0x4 struct kal_pool_desc_t * struct kal_pool_desc_t * pool_desc

0x8 0x4 uint uint hdr_guard

0xc 0x4 void * void * int_ctx

0x0 0x1 char char is_ready

0x1 0x1 char pad

0x2 0x2 ushort pad

0x4 0x4 void * void * pool_start

0x8 0x4 uint uint pool_net_slot_size

0xc 0x2 ushort ushort partition_max_cnt

0xe 0x2 ushort ushort

0x10 0x1 char char lvl2_size

0x11 0x1 char pad

0x12 0x1 char pad

0x13 0x1 char pad

0x14 0x4 uint uint lvl1_bitmask

0x18 0x80 uint[32] uint[32] lvl2_bitmask

For each pool, this structure now maintains a two level bitmask array, which is used to flip the state of partitions on free/alloc and also to pick the next returned partition when allocating.

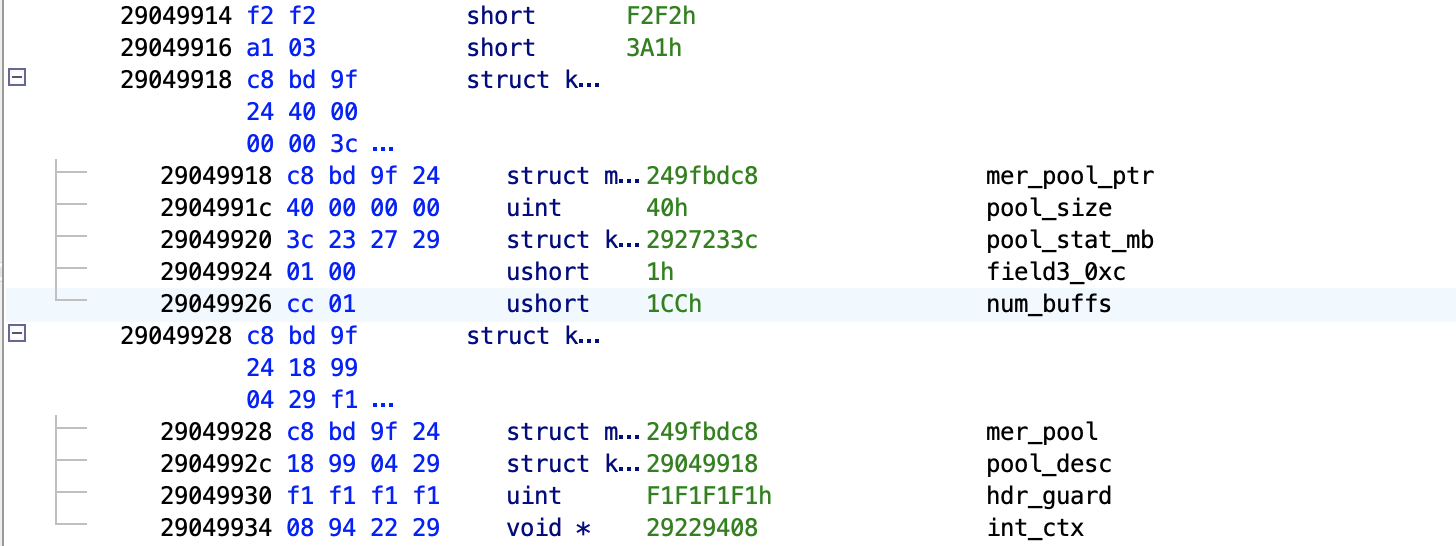

Accordingly, both the partition header and the pool descriptor has been modified. The partition header’s first field now points directly at the new mer pool descriptor instead of a next_available partition, and similarly the pool descriptor now has a completely new format which starts wih a pointer to the corresponding mer_pool_desc_t instead of the (previously unused anyway) previous and next pool pointers.

struct kal_buffer_header_t

0x0 0x4 struct mer_pool_desc_t * struct mer_pool_desc_t * mer_pool

0x4 0x4 struct kal_pool_desc_t * struct kal_pool_desc_t * pool_desc

0x8 0x4 uint uint hdr_guard

0xc 0x4 void * void * int_ctx

struct kal_pool_desc_t

0x0 0x4 struct mer_pool_desc_t * struct mer_pool_desc_t * mer_pool_ptr

0x4 0x4 uint uint pool_size

0x8 0x4 struct kal_pool_stat_t * struct kal_pool_stat_t * pool_stat_mb

0xc 0x2 ushort ushort

0xe 0x2 ushort ushort num_buffs

As an example, here is a screenshot from Ghidra of the footer of the final partition of the 32 size pool, the pool descriptor for the 64 size pool, and the header of the first partition of the later. (This Ghidra representation is based on generating a true memory view snapshot during runtime using our custom, Mediatek baseband debugger.)

As we can see, the pool descriptors are still inline, but the new mer_pool_desc_t is not stored inline on the heap.

The creation of the pools and the mer pool descriptor array can be observed in kal_os_allocate_buffer_pool, which shows that the memory is reserved for the pools with the “backend allocator” of Nucleus, kal_sys_mem_alloc.

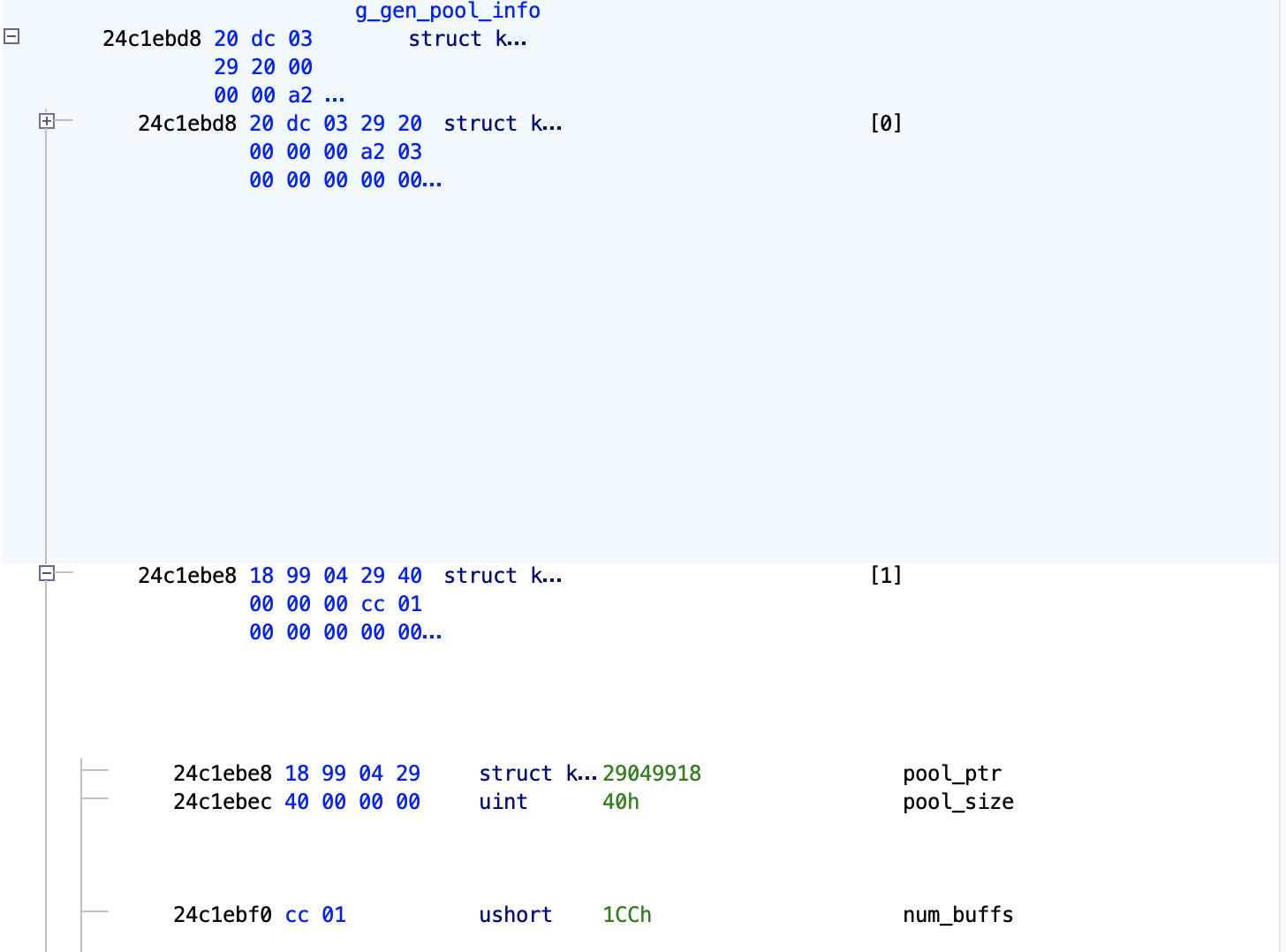

struct kal_buff_pool_info_t

0x0 0x4 struct kal_pool_desc_t * struct kal_pool_desc_t * pool_ptr

0x4 0x4 uint uint pool_size

0x8 0x2 ushort ushort partition_cnt

0xa 0x2 ushort ushort

0xc 0x4 uint uint

struct kal_pool_size_info_t

0x0 0x4 struct kal_pool_desc_t * struct kal_pool_desc_t * pool_ptr

0x4 0x4 void * void * pool_start

0x8 0x4 void * void * pool_end

To find the necessary heap management structures, a few global arrays are now used, in particular the kal_buff_pool_info_t type g_gen_pool_info which stores the start/partition size/num_buffs info for all the regular pools, and the kal_pool_size_info_t type g_poolSizeInfo. (If you are atuned to the finer details of the previous post, g_gen_pool_info is the more recent name we labeled the structure called ctrl_buff_pool_info_g in that one.)

It is a bit confusing that there are two of these arrays. Later on, it becomes clear why there are two and how one is used to select the right pool to allocate from, whereas the other is used to validate the address chosen. More on this later.

New Heap Algorithms

The heap is still used through the get_ctrl_buffer_ext() and free_ctrl_buffer_ext(). However, the steps taken to allocate a buffer from the heap and, most importantly, the sanity checks made have also changed.

For allocations, the API is a straight wrapper around get_int_ctrl_buffer(), wherer the initial selection of the pool to service a request from happens the same way as before, by walking the g_gen_pool_info array of pool information structures.

Once the pool for the requested size is chosen, __kal_get_buffer() finds a buffer to return by calling kal_os_allocate_buffer() and then validates the buffer returned with a series of checks through __kal_get_buff_num() and kal_update_buff_header_footer().

void * get_int_ctrl_buffer(uint size,uint fileId,undefined4 lineNum,undefined caller_addr)

{

int idx;

uint module_id;

void *buff;

void *pool_ptr;

struct kal_pool_desc_t *pool_desc;

struct kal_buff_pool_info_t *pPool;

if (size != 0) {

pPool = g_gen_pool_info;

idx = 0;

do {

/* note: using pool_size here makes sense because pool_size is the

"net_slot_size", without the +8+8+4. Therefore, if the alloc request size

fits, it fits, quite simply. */

if (size <= pPool->pool_size) {

pool_desc = g_gen_pool_info[idx].pool_ptr;

if (pool_desc != (struct kal_pool_desc_t *)0x0) {

module_id = stack_get_active_module_id();

buff = (void *)kal_get_buffer(pool_desc,module_id,size,fileId,lineNum);

return buff;

}

break;

}

idx = idx + 1;

pPool = pPool + 1;

} while (idx != 0xd);

}

do {

trap(1);

} while( true );

}

void * kal_get_buffer(struct kal_pool_desc_t *pool_desc,undefined4 module_id,uint size,int file,

undefined4 line)

{

uint ret;

uint int_context;

uint buff_num;

int extraout_a0;

struct kal_pool_stat_t *psVar1;

void *new_alloc;

new_alloc = (void *)0x0;

if ((pool_desc != (struct kal_pool_desc_t *)0x0) &&

(ret = kal_os_allocate_buffer(pool_desc,(int *)&new_alloc,size,file,line), ret == 0)) {

/* add +8 for headers -> there are 4 dword headers in total,

kal_os_allocate_buffer skips 2 of them already */

new_alloc = (void *)((int)new_alloc + 8);

int_context = kal_get_internal_context();

if (pool_desc == *(struct kal_pool_desc_t **)((int)new_alloc + -0xc)) {

buff_num = __kal_get_buff_num(new_alloc,(short *)0x0);

kal_update_buff_header_footer(pool_desc,new_alloc,int_context,size,buff_num);

psVar1 = pool_desc->pool_stat_mb;

psVar1->buff_stat[buff_num].field0_0x0 = int_context | 1;

kal_atomic_update_return(&psVar1->curr_allocated,1,0xffffffff);

kal_atomic_sig(&psVar1->max_allocated,extraout_a0 + 1);

kal_atomic_sig(&psVar1->max_req_size,size);

if (file != 0) {

kal_debug_update_buff_history

((struct kal_buffer_header_t *)new_alloc,int_context,1,size,file,line,

(undefined2)module_id,UserTraceData,(char)buff_num);

return new_alloc;

}

}

}

do {

trap(1);

} while( true );

}

The function kal_os_allocate_buffer() is now basically just a wrapper around the new “mer” heap implementation, which means calling mer_service_fixmem_alloc_blk(). Here, the “mer” structure to use is passed by taking it from the mer_pool_ptr field of the chosen pool’s descriptor.

As one would expect for a pool-based heap, the actual algorithm simply selects the first free slot using the two level bitmap array. (Notice how this means an important algorithmic change: the pools used to be allocated from the end, but now the bitmaps are filled in from the beginning, for consecutive allocations.)

void* mer_service_fixmem_alloc_blk

(struct mer_pool_desc_t *mer_pool,int *out_buffer,uint size_3,uint fileId,uint lineNum)

{

void* ret;

uint lvl2_slot;

uint new_lvl2_bitmask;

uint uVar1;

uint array_idx;

uint uVar2;

ret = 0xfffffffd;

if ((mer_pool->is_ready != '\0') && (ret = 0xfffffffc, mer_pool->pool_start != (void *)0x0)) {

mer_kernel_lock_take((int *)&DAT_249fb620);

uVar2 = mer_pool->lvl1_bitmask;

ret = 0xfffffffb;

uVar1 = reverse_all_bits(~uVar2);

array_idx = countLeadingZeros(uVar1);

if ((array_idx & 0xff) < (uint)(int)mer_pool->lvl2_size) {

ret = reverse_all_bits(~mer_pool->lvl2_bitmask[array_idx]);

lvl2_slot = countLeadingZeros(ret);

new_lvl2_bitmask = 1 << (lvl2_slot & 0x1f) | mer_pool->lvl2_bitmask[array_idx];

*out_buffer = (int)((int)mer_pool->pool_start +

mer_pool->pool_net_slot_size * (array_idx * 0x20 + lvl2_slot));

mer_pool->lvl2_bitmask[array_idx] = new_lvl2_bitmask;

if (new_lvl2_bitmask == 0xffffffff) {

mer_pool->lvl1_bitmask = 1 << (array_idx & 0x1f) | uVar2;

}

mer_kernel_lock_release_with_ei((undefined4 *)&DAT_249fb620);

ret = 0;

}

}

return ret;

}

For frees, free_ctrl_buffer_ext() is a straight wrapper around kal_release_buffer(), which similarly validates the buffer to be freed (using kal_get_num, kal_is_valid_buff(), and also the extra padding guard values with kal_debug_validate_buff_footer() in case the allocation wasn’t to the full partition size).

void kal_release_buffer(struct kal_buffer_header_t *address,undefined2 param_2,int file_name,

undefined4 line)

{

uint buff_slot_id;

uint internal_context;

uint ret;

struct kal_pool_desc_t *pool_desc;

ushort pool_idx;

struct kal_buff_history_node history_node;

pool_idx = 0xfeee;

if (address != (struct kal_buffer_header_t *)0x0) {

/* this verifies that the address actually falls into one of the correct pools

*/

buff_slot_id = __kal_get_buff_num(address,(short *)&pool_idx);

/* verifies:

* partition pool_ptr points to right pool

* header guard check

* paritition is within pool boundaries

* footer guard check (including correct slot for address)

ONCE AGAIN: no checking on the partition's mer pointer - but it is used in

the end!!! */

kal_is_valid_buffer(address,buff_slot_id);

pool_desc = address[-1].pool_desc;

kal_atomic_update(&pool_desc->pool_stat_mb->curr_allocated,-1,0xffffffff);

internal_context = kal_get_internal_context();

pool_desc->pool_stat_mb->buff_stat[buff_slot_id].field0_0x0 = internal_context;

__kal_debug_get_last_history_node(address,&history_node,(uint)pool_idx,buff_slot_id);

/*

this check verifies that this is a block that was last allocated, not freed

*/

if (history_node.is_alloc == '\x01') {

if (history_node.size + 4 <= pool_desc->pool_size) {

/* the actual size request is not in the partition header anywhere, so it can

only be taken from the history node. with that the potential additional

padding footer guard values can be verified correctly. */

kal_debug_validate_buff_footer(address,&history_node);

}

if (file_name != 0) {

kal_debug_update_buff_history

(address,internal_context,0,history_node.size,file_name,line,param_2,UserTraceData

,(char)buff_slot_id);

/* check the is_alloced bit */

if ((address[-1].hdr_guard >> 1 & 1) != 0) {

/* Sets whole buffer to "FREE" */

kal_set_free_pattern(address,pool_desc->pool_size);

/* sets the is_alloced bit */

address[-1].hdr_guard = address[-1].hdr_guard & 0xfffffffd;

}

ret = kal_os_deallocate_buffer((int)&address[-1].hdr_guard);

if (ret == 0) {

return;

}

}

}

}

do {

trap(1);

} while( true );

}

If checks pass, the free algorithm then uses kal_os_deallocate_buffer() to actually free the partition, which is again a wrapper around the new “mer” heap actual algorithm, provided by mer_service_fixmem_free_blk(). In the case of freeing, as one would expect, that function “simply” flips the requisite bits of the mer structure bitmaps. The algorithm here finds the mer structure to use by taking the pointer from the partition’s header.

uint mer_service_fixmem_free_blk(struct mer_pool_desc_t *mer_pool,int blk_address)

{

uint uVar1;

uint slot_id;

uint array_field;

slot_id = (uint)(blk_address - (int)mer_pool->pool_start) / mer_pool->pool_net_slot_size;

mer_kernel_lock_take((int *)&DAT_249fb620);

uVar1 = slot_id >> 5;

array_field = uVar1 & 0xff;

mer_pool->lvl1_bitmask = mer_pool->lvl1_bitmask & ~(1 << (uVar1 & 0x1f));

mer_pool->lvl2_bitmask[array_field] =

~(1 << (slot_id & 0x1f)) & mer_pool->lvl2_bitmask[array_field];

mer_kernel_lock_release_with_ei((undefined4 *)&DAT_249fb620);

return 0;

}

New Sanity Checks

The first important change, of course, is the removal of the freelist of partitions, which eliminates the freelist hijack attacks.

Second, after kal_os_allocate_buffer() returns a selected partition, its inline pool descriptor pointer is verified to point to the same pool descriptor that was selected by get_int_ctrl_buffer(), which means we couldn’t “just” manipulate allocations to return legitimate partitions from other pools.

Next, we have a new check added to __kal_get_buff_num(). This is critical and was clearly done to mitigate the alloc-anywhere primitive constructed in our previous publication.

This function not only calculates the partition slot id that the chosen buffer has (using the ((int)buff_addr - (int)pool_start) / ((g_poolSizeInfo[idx].pool_ptr)->pool_size + 20) formula) but before it does that, it uses the global g_poolSizeInfo array to verify that the chosen buffer’s address falls within the range of any valid pool. Obviously, this alone would not guarantee that the address is in the right pool, but the above check of matching the pool descriptor already is meant to assure that.

Finally, the kal_update_buff_header_footer() call verifies the guard values in the header and the footer. These values are known, but the footer includes the partition id, so it is different for each (valid) partition.

Obviously, these checks are meant to assure that we can’t manipulate the allocator to return a buffer that is misaligned or outside the legitimate pool.

On the other side, when freeing a buffer, first the buffer number is calculated again with __kal_get_buff_num(). As before, this also assures already that we can’t survive a completely “wild” free of a non-pool address.

After this, the call to kal_is_valid_buffer() is meant to verify the sanity of the buffer. Once that is passed, the pool descriptor pointer is not acquired by walking the global and finding the one that fits the (requested) size, but it is taken directly from the buffer’s header.

There are several dereferences of this structure, including double dereferencing to write fields like pool_desc->pool_stat_mb->curr_allocated and pool_desc->pool_stat_mb->buff_stat so obviously an unverified pool descriptor pointer in a freed header would lead to arbitrary address writes.

However, kal_is_valid_buffer() takes care of this, because here the pool descriptor list g_poolSizeInfo is walked until the one that has the same pool_desc address as the one in the header is found (abort on failure to match). In addition to that matching, the function also checks the header and footer guard value’s correctness, as in the allocation path.

This is not the end of checks, however, because there is yet another set of structures in play: the ringbuffers of heap events. While this would first look like it is only for debugging purposes, it is actually also used for integrity checking here.

In Mediatek’s case, these ringbuffers are not for the entire heap, but instead they are per-partition. This history array for each pool is stored in (g_poolSizeInfo[pool_idx].pool_ptr)->pool_stat_mb->buff_stat and its elements are of type struct kal_buff_history_desc_t.

Each history descriptor corresponds to one of the partition slots, so the history array is accessed with the __kal_get_buff_num() calculated history[buff_num]. Each element contains a ringbuffer that has 3 slots and contains the heap event nodes, type struct kal_buff_history_node:

0x0 0x4 uint uint context_id

0x4 0x1 char char is_alloc

0x5 0x1 char char pad

0x6 0x2 short short module_id

0x8 0x4 uint uint file

0xc 0x4 uint uint line

0x10 0x4 uint uint size

0x14 0x4 uint uint ts

As we can see, this methodology allows the algorithm to check whether the last event on the partition was an allocation and retrieve the requested size of the allocation. If the former fails, the heap aborts. Otherwise, the later is used to check whether the padding bytes have the correct guard pattern, in case padding was necessary.

Finally, the “is_alloced” flag bit of the partition header guard field itself is checked. Interestingly, if this is not set correctly, nothing happens. If it’s set correctly, than it is flipped and the entire buffer is filled with the “FREE” pattern.

In summary, a valid partition is one that:

- falls within the pool that the requested size was for

- contains 4 bytes at

buffer - 0xcthat are the correctpool_desc - contains 4 bytes at

buffer - 8that must be0xf1f1f1fX) - contains 4 bytes at

buffer + pool_desc->pool_sizethat must be0xNNNNf2f2, where the NNNN is the calculatedbuff_num << 0x10 - if it is meant to be freed, has its last partition history node as an allocation

- if it is meant to be freed and had padding bytes, has the correct

0xf2f2pattern in the padding

Lastly, I mention that in the case of all of these checks, failure results in an abort (trap(1)).

New Gaps

Finally, after all this, it’s time to discuss new attacks against the heap.

Despite eliminating partition-header freelists entirely and adding a host of new checks, the change to the “mer” implementation has an Achilles’ heel: the mer_pool_desc_t pointers of partition headers and pool descriptors are not explicitly validated by the alloc/free steps!

This is a big gap especially because those fields, as we recall, come first in both structures:

struct kal_buffer_header_t

0x0 0x4 struct mer_pool_desc_t * struct mer_pool_desc_t * mer_pool

(...)

struct kal_pool_desc_t

0x0 0x4 struct mer_pool_desc_t * struct mer_pool_desc_t * mer_pool_ptr

(...)

In addition, we find one more obvious problem: when allocating a new buffer, the selected buffer is not checked for its “in use” bit. This state is verified in frees as we have seen, but it is completely neglected during the allocation.

In other words, the check of the header guard field in kal_update_buff_header_footer() is not complete: while it attemps to verify the other bits of the guard value, it simply masks out the last nibble containing the in-use bit. (Obviously, I can’t tell whether it was the intent was missing or the mask calculation was just mis-implemented, but the result is the same regardless.)

void kal_update_buff_header_footer

(struct kal_pool_desc_t *pool_desc,struct kal_buffer_header_t *new_buffer,

void *int_context,int size,int buff_num)

{

void *end_of_chunk;

/* Masks the in_use bit away */

if ((new_buffer[-1].hdr_guard & 0xfffffff0) == 0xf1f1f1f0) {

new_buffer[-1].int_ctx = int_context;

if (*(uint *)((int)&new_buffer->mer_pool + pool_desc->pool_size) == (buff_num << 0x10 | 0xf2f2U)

) {

if (size + 4U <= pool_desc->pool_size) {

end_of_chunk = (void *)((int)&new_buffer->mer_pool + size);

*(undefined *)end_of_chunk = 0xf2;

*(undefined *)((int)end_of_chunk + 1) = 0xf2;

*(undefined *)((int)end_of_chunk + 2) = 0xf2;

*(undefined *)((int)end_of_chunk + 3) = 0xf2;

}

return;

}

}

do {

trap(1);

} while( true );

}

Armed with these observations, I’ve come up with a number of ways to create new heap exploitation primitives.

Bitflip-What-Where

If we target a free partition with the overwrite, we can corrupt its mer pool descriptor pointer. If that pointer is replaced, then the bitmap operations on its fields become a “bitflip-what-where”, since there are no constraints/checks at all on where the pointer points:

uint mer_service_fixmem_free_blk(struct mer_pool_desc_t *mer_pool,int blk_address)

{

uint uVar1;

uint slot_id;

uint array_field;

slot_id = (uint)(blk_address - (int)mer_pool->pool_start) / mer_pool->pool_net_slot_size;

mer_kernel_lock_take((int *)&DAT_249fb620);

uVar1 = slot_id >> 5;

array_field = uVar1 & 0xff;

/*

bit flips at an arbitrary address */

mer_pool->lvl1_bitmask = mer_pool->lvl1_bitmask & ~(1 << (uVar1 & 0x1f));

mer_pool->lvl2_bitmask[array_field] =

~(1 << (slot_id & 0x1f)) & mer_pool->lvl2_bitmask[array_field];

mer_kernel_lock_release_with_ei((undefined4 *)&DAT_249fb620);

return 0;

}

What’s nice is that - as it was explained in the above - none of the other steps of the free algorithm rely on this mer pointer field of the partition that is freed, so this bitflipping will not have any other side-effects, the free will just succeed otherwise.

Of course, if the same partition were to get allocated again, that would be an issue with the corrupted mer pointer. However, proper heap shaping can prevent this for an attack, since the allocation algorithm is first-free-slot-taken, not LIFO.

In the general case, this could be very powerful, since it is a bitflip-anywhere primitive.

But in our case, this generic technique doesn’t fit our CVE, since we don’t have the ability to write an arbitrary pointer value. If we corrupt the mer pointer of an adjacent partition with our heap overflow, we would get bitflips inside or adjacent to the PNCD buffer allocation itself.

Still, that’s not entirely useless either: since the PNCD heap allocations (that the overflowing pointers point to) are 0x1C bytes long, what we would get is the ability to control all fields of the mer structure until the 2nd level bitmap, but then have that array of bitmaps overlap an adjacent allocation. In other words, this primitive translates to an arbitrary chosen bitflip inside an allocation in the smallest size (0x20) pool.

struct mer_pool_desc_t

0x0 0x4 struct mer_pool_desc_t * struct mer_pool_desc_t * mer_pool

0x4 0x4 struct kal_pool_desc_t * struct kal_pool_desc_t * pool_desc

0x8 0x4 uint uint hdr_guard

0xc 0x4 void * void * int_ctx

0x0 0x1 char char is_ready

0x1 0x1 char pad

0x2 0x2 ushort pad

0x4 0x4 void * void * pool_start

0x8 0x4 uint uint pool_net_slot_size

0xc 0x2 ushort ushort partition_max_cnt

0xe 0x2 ushort ushort

0x10 0x1 char char lvl2_size

0x11 0x1 char pad

0x12 0x1 char pad

0x13 0x1 char pad

0x14 0x4 uint uint lvl1_bitmask

0x18 0x80 uint[32] uint[32] lvl2_bitmask

In fact, since the PNCD overflow can go as far as we want it to, we could trigger this on multiple adjacent allocated partitions, which means multiple chosen bitflips inside allocations in the 32 pool.

So the primitive is not harmless even with our limited overwrite, but it’s definitely not as powerful as we would like or as it would be with an overflow where the bytes written are fully controlled.

If we look at the allocation side of the heap for attack techniques, it is easy to see now that the same “corrupt the mer pointer to get an arbitrary bitflip” trick could be viable here as well, but there are a couple differences:

- One, unlike the free path, the mer structure pointer will be taken from the pool descriptor, so the overflow needs to corrupt the adjacent pool’s descriptor instead of “only” the adjacent partition’s descriptor. This is now actually easier to accomplish than it was before, since pools are now filled in from the start. That makes it possible (especially with a spraying primitive) to assure that all the overflowing from our buffer only goes into “no man’s land” free partitions before it reaches the end and corrupts the next pool’s descriptor.

- Two, the bitflipping that happens in the alloc case, unlike the free case, has a huge side-effect of course: it immediately returns an (arbitrary) value as the chosen buffer. And as we know, this would likely cause an abort.

Return of the Allocate-Anywhere

Before I look at something else, it’s still worth considering how this bitflip primitive can be exploited in the general case!

In fact, we don’t have to look outside the heap to find a great victim for this. If we bitflip one of the pool boundary values in the g_poolSizeInfo array, we circumvent the check that is meant to prevent the “allocate-from-anywhere” pattern!

Then, a subsequent trigger of the same overflow causing a subsequent partition free to be corrupted can now point to a fully attacker-controlled mer structure and achieve the return of the freely chosen address.

Of course, because the additional allocation checks verify guard values in the header and footer, we couldn’t force any arbitrary address to be a returned allocation buffer, but there would still be plenty of opportunities on the stack and in global memory for areas where we have control of values adjacent to something that we want to target. Keep in mind that allocation sizes go up into the thousands, so “adjacent” is not all that restrictive.

Return of the Misaligned Allocations

Before I describe the last attack I came up with, which needs no additional control of values, I mention briefly that the previous “misaligned alloc” attack can still be revived with enough control of values written, but that requires a more powerful overflow primitive in the first place.

While it doesn’t fit this CVE, as a thought exercise, I make the following observations:

- oveflowing into the pool descriptor boundary and corrupting the size field of the pool can manipulate the calculated

buff_numinto anything, - controlling not just a pool’s mer pointer but also a (valid or fake) partition’s pool descriptor pointer and guard values, we can make any partition of a different-then-intended-for-the-size-request pool OR a misaligned location inside a partition be accepted as a valid partition anyway

All that is viable and can get the attacker the ability to corrupt the data of another live allocation, but it really needs much better control over overwriting values than this CVE had.

The good news is, there’s a much simpler and more powerful way to take over other allocations!

Double-Alloc-Anything

As I’ve already described, for the allocation case if we want to corrupt the mer descriptor pointer, we have to overwrite the adjacent pool’s descriptor.

Again, as I already outlined, due the the first-free-slot-taken nature of the pool selection algorithm now, and the fact that this pointer is the very first field of the pool descriptor, this is quite a practical attack with our CVE’s corruption primitive.

On the other hand, we know that we need the fake mer pool descriptor (which is the data of the PNCD allocation that we conrol) to return an actual valid partition, otherwise the subsequent checks abort.

However, we have also observed that the “is alloced” bit is neglected! This means that, by fully controlling all the values of the mer descriptor, including the 1st level bitmap, we CAN return a perfectly legitimate partition that is actually busy and not free at the time!

Long last, we have a nice primitive: a more or less perfect UAF. We can now select any allocation that goes into the adjacent pool and create a situation where we’ll be able to allocate “over it”.

Next Up: Victim Allocations

We have now crafted a powerful and generic exploit primitive from our limited control heap overflow by attacking the heap implementation itself. We could take over any already allocated partition in the targeted pool, in other words, create the equivalent of a strong UAF for any allocation that falls into the given pool.

At this stage, I have actually spent a considerable amount of time searching for victims to use in the pool classes that were relevant for this CVE.

For a number of reasons, finding a good one is not exactly straightforward in the Mediatek baseband:

- the 2G codebase is written in C, there are no C++ virtual class object instances providing convenient targets;

- the vast majority of task context descriptor structure instances don’t live on the heap;

- the classic target of event/timer objects are also out: these would happen to provide perfect targets due to their structure layout, but they get their own separate pool, which is not part of the “normal” list of per-size pools, and since all pool allocations happen at init time and are final, a “cross-cache” style approach is not feasible.

Nonetheless, I eventually found suitable options for heap shaping and victim allocations!

In the next edition of this series, we are going to look at identifying victim allocations and I’ll share my findings.

Stay tuned for upcoming releases!